INT2 becomes the Elite Partner of Graphcore in Viet Nam

Intelligent Integration Co., Ltd. (INT2), the leading HPC/AI system integrator in Viet Nam, and Graphcore, the Bristol-based start-up (United Kingdom), signed a reseller agreement on July 15, 2021, making INT2 the official reseller in Viet Nam of Graphcore’s products, including the IPU-M2000, IPU-POD16 and IPU-POD64. In addition, Graphcore, via INT2, offers its Academic Programme to scientists at universities, institutes and national laboratories in Viet Nam, giving them free access to IPUs on the Graphcore Cloud for up to 6 months to support their research.

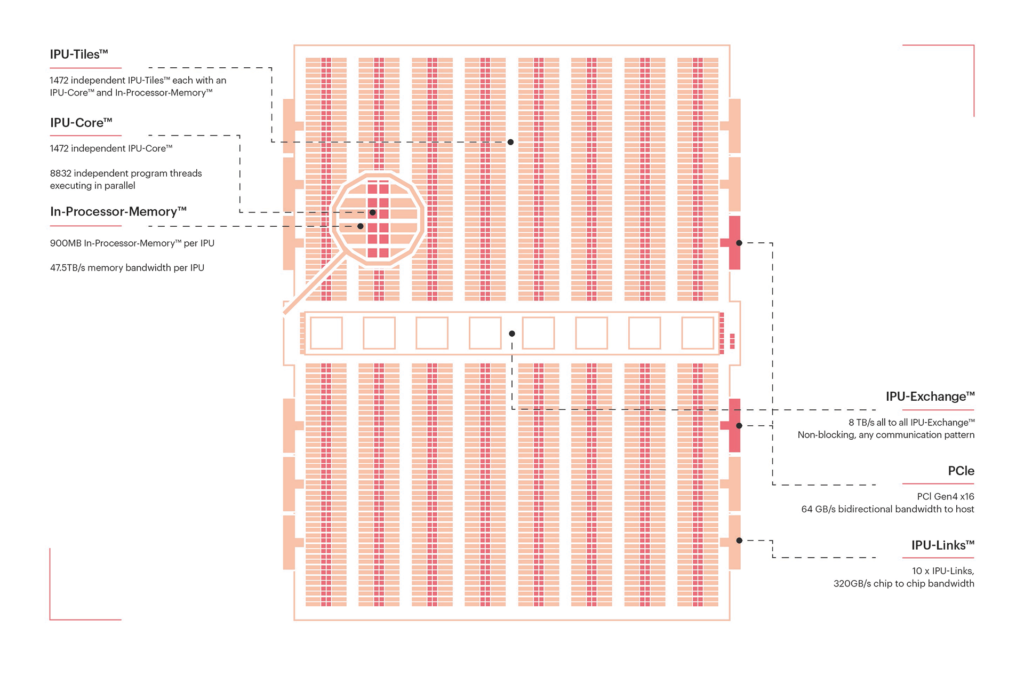

The IPU is a completely new kind of massively parallel processor, co-designed from the ground up with the Poplar® SDK, to accelerate machine intelligence. Since its first-generation Colossus IPU, Graphcore has made groundbreaking advances in compute, communication and memory in its silicon and systems architecture, achieving an 8x step up in real-world performance compared to the MK1 IPU. The GC200 is the world’s most complex processor, made easy to use thanks to Poplar software, so innovators can make AI breakthroughs.

As an AI developer,

You are looking to break new ground, find better efficiencies and iterate faster. IPU-POD16 is your perfect platform to experience a brand new approach to AI innovation and execution. While IPU-POD16 offers a radically different approach to AI compute under the hood, you still get to use standard frameworks and tools like TensorFlow and PyTorch. The Poplar SDK provides a full suite of software and libraries to support your AI development.

As an IT leader,

Simple to deploy: IPU-POD16 is a plug-and-play system, directly attached to a choice of approved host servers, delivering impressive AI compute for both training and inference. High compute density in a 5U footprint and a flexible, modular design simplify capacity planning and minimize the cost of scale-out. The core IPU-M2000 building blocks and host server can easily be reconfigured as part of larger, switched IPU-POD datacenter systems in the future. And if you want to allocate IPU-POD16 compute resources to several users or to more than one task, Graphcore’s Virtual IPU-POD enables multi-tenancy.

As a business leader,

Where is your next leadership advantage coming from? IPU-POD16 enables your team to experiment and innovate like never before. Their innovations with IPU-POD will give you that advantage, whether you are pursuing higher revenue or cutting-edge industry breakthroughs.

For more information on Graphcore’s products, please contact us at [email protected].